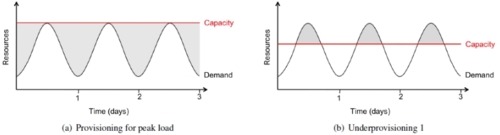

Let’s start with a basic scenario: demand for an application service suddenly peaks as the number of client requests increases. The event has a direct and immediate impact on the load placed on the web servers that host the service. In the traditional world the number of servers is fixed, so an overload degrades application performance, and the service may slow down or even become unavailable. The IT team will want to restore the environment and bring the service back up as soon as possible, but the immediate impact of such an event on the business can be devastating. Starting from this simple understanding, we can move into the world of cloud computing and its resource consumption, and relate it to the key differences between the traditional data center and today’s cloud technologies.

James Hamilton, Vice President at Amazon Web Services, makes the following observations regarding data center operations costs, servers and power:

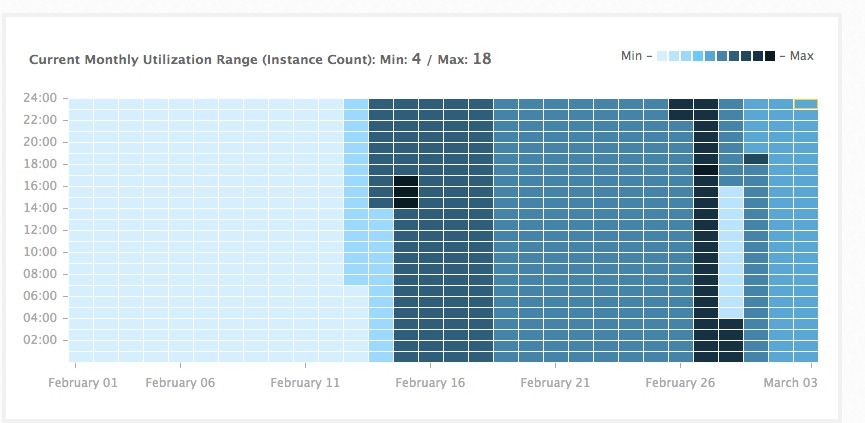

“Resource Consumption Shaping is an idea that Dave Treadwell and I came up with last year. The core observation is that service resource consumption is cyclical. We typically pay for near peak consumption and yet frequently are consuming far below this peak. For example, network egress is typically charged at the 95th percentile of peak consumption over a month and yet the real consumption is highly sinusoidal and frequently far below this charged for rate. Substantial savings can be realized by smoothing the resource consumption.”

“The resource-shaping techniques we’re discussing here, that of smoothing spikes by knocking off peaks and filling valleys, applies to all data center resources. We have to buy servers to meet the highest load requirements. If we knock off peaks and fill valleys, less servers are needed. This also applies also to internal networking. In fact, Resource Shaping as a technique applies to all resources across the data center. The only difference is the varying complexity of scheduling the consumption of these different resources.”
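The 95th-percentile billing point in the first quote can be made concrete with a small sketch. Everything here is an illustrative assumption rather than data from Hamilton's post: the hourly sampling, the 30-day month, the sinusoidal traffic profile, and the helper name `percentile_nearest_rank`. The sketch compares what would be billed for a cyclical load with what would be billed if the same total volume were perfectly smoothed.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Return the pct-th percentile (pct is an integer) by the nearest-rank method."""
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100, avoiding floating-point rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


HOURS = 24 * 30  # assumption: one 30-day month, sampled hourly

# Hypothetical daily cycle: 100 units on average, swinging by +/- 80.
cyclical = [100 + 80 * math.sin(2 * math.pi * h / 24) for h in range(HOURS)]

# The same total volume with peaks knocked off and valleys filled.
average = sum(cyclical) / HOURS
smoothed = [average] * HOURS

billed_cyclical = percentile_nearest_rank(cyclical, 95)
billed_smoothed = percentile_nearest_rank(smoothed, 95)

print(f"average consumption:      {average:.1f}")
print(f"billed (cyclical load):   {billed_cyclical:.1f}")
print(f"billed (smoothed load):   {billed_smoothed:.1f}")
```

Under these assumptions the cyclical load is billed well above its average, while the smoothed load is billed exactly at its average, which is the saving the quote describes.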

Read more on “Perspectives”, Hamilton’s blog.

Source: UC Berkeley report

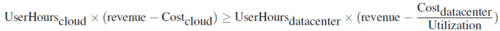

The simple equation above demonstrates the cost and agility benefits of the cloud compared with the traditional data center. Basically, if the data center is 100% utilized, the two sides of the equation are equal.
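As a rough illustration, assume the equation in the figure is the cost trade-off from the UC Berkeley report, in which the data center's hourly cost is divided by its average utilization. The function names, parameter names, and sample figures below are hypothetical and chosen only to show the effect of utilization.

```python
def cloud_profit(user_hours, revenue_per_hour, cost_per_hour):
    """Expected profit when paying only for the hours actually used."""
    return user_hours * (revenue_per_hour - cost_per_hour)


def datacenter_profit(user_hours, revenue_per_hour, cost_per_hour, utilization):
    """Expected profit when idle capacity inflates the effective hourly cost."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return user_hours * (revenue_per_hour - cost_per_hour / utilization)


# Hypothetical figures: 1,000 user-hours, 1.00 revenue and 0.10 cost per hour.
for utilization in (1.0, 0.5, 0.2):
    dc = datacenter_profit(1000, 1.00, 0.10, utilization)
    cloud = cloud_profit(1000, 1.00, 0.10)
    print(f"utilization {utilization:.0%}: data center {dc:.0f}, cloud {cloud:.0f}")
```

With equal hourly costs, the two sides match only at 100% utilization; as utilization drops, the effective data center cost per useful hour rises and the cloud side pulls ahead.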

“The key observation is that Cloud Computing’s elasticity ability, to add or remove resources in minutes (using automated scaling) rather than days or weeks (the case for the traditional IT organizations) allows matching resources to the demand.”

“Amazing is the fact that 11.8 million servers in the USA in 2007 where most of those machines runs at 15% capacity or less (reported by Computer Worlds magazine). Real world estimates of server utilization in the traditional DC range from 5% to 20%.”

To learn more, check out the UC Berkeley report (by the EECS Department).

Cloud providers strive to keep their servers fully utilized and running all the time. Hamilton notes that turning off a server is not as economically efficient as using the server fully at all times:

“it works out better financially if the servers running at all times, and are fully utilized by Amazon AWS paying customers”, he says.

Cloud elasticity enables cloud consumers to purchase resources on demand. This basic truth led Amazon to introduce its Spot Instances: customers can bid on unused Amazon EC2 capacity and run those instances for as long as their bid exceeds the current Spot Price. The Spot Price changes periodically based on supply and demand, and customers whose bids exceed it gain access to the available Spot Instances. By offering this option, Amazon encourages the consumption of otherwise idle resources and so reduces its unused capacity. The option to reserve an AWS EC2 instance for a whole year has the same effect on AWS cloud utilization.
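The bidding rule described above can be sketched in a few lines. This is only a toy model of the rule as stated in this article (an instance keeps running while the bid exceeds the current Spot Price). The function name and the hourly prices are made up for illustration and are not real AWS data or an AWS API.

```python
def hours_running(bid, spot_prices):
    """Count the consecutive hours an instance runs before the Spot Price
    reaches or exceeds the bid."""
    hours = 0
    for price in spot_prices:
        if bid <= price:
            break  # bid no longer exceeds the Spot Price: instance stops
        hours += 1
    return hours


# Hypothetical hourly Spot Prices in dollars.
prices = [0.05, 0.07, 0.12, 0.06]

print(hours_running(0.10, prices))  # the price rises above a 0.10 bid in hour 3
print(hours_running(0.15, prices))  # a 0.15 bid is never exceeded here
```

A higher bid buys longer uninterrupted runs, while a lower bid suits work that can tolerate being stopped when demand rises.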

The following example, presented in the UC Berkeley report, demonstrates the severe business impact of an unstable hosting environment, which the cloud solves:

Suppose but 10% of users who receive poor service due to under provisioning are “permanently lost” opportunities, i.e. users who would have remained regular visitors with a better experience. The site is initially provisioned to handle an expected peak of 400,000 users (1000 users per server × 400 servers), but unexpected positive press drives 500,000 users in the first hour. Of the 100,000 who are turned away or receive bad service, by our assumption 10,000 of them are permanently lost, leaving an active user base of 390,000. The next hour sees 250,000 new unique users. The first 10,000 do fine, but the site is still over capacity by 240,000 users. This results in 24,000 additional defections, leaving 376,000 permanent users. If this pattern continues, after lg 500000 or 19 hours, the number of new users will approach zero and the site will be at capacity in steady state. Clearly, the service operator has collected less than 400,000 users’ worth of steady revenue during those 19 hours, however, again illustrating the under-utilization argument, to say nothing of the bad reputation from the disgruntled users.

When Animoto made its service available via Facebook, it experienced a demand surge that resulted in growing from 50 servers to 3500 servers in three days. Even if the average utilization of each server was low, no one could have foreseen that resource needs would suddenly double every 12 hours for 3 days.
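A quick check shows how the "doubling every 12 hours" description relates to the 50 and 3500 server figures. The helper names are hypothetical, and the sketch assumes smooth exponential growth over the full three days.

```python
import math


def servers_after(start, hours, doubling_hours=12):
    """Servers needed after `hours` if demand doubles every `doubling_hours`."""
    return start * 2 ** (hours / doubling_hours)


def implied_doubling_hours(start, end, hours):
    """Doubling period implied by growing from `start` to `end` in `hours`."""
    return hours * math.log(2) / math.log(end / start)


print(servers_after(50, 72))                          # six doublings from 50
print(round(implied_doubling_hours(50, 3500, 72), 1)) # period implied by 50 -> 3500
```

Six doublings from 50 servers give 3,200, so the reported growth to 3500 corresponds to a doubling period of slightly under 12 hours.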

Together with its huge benefits, the cloud brings additional costs and a need for new IT skills. Consumers must make a “do it yourself” investment to ensure that the cloud building blocks are used and composed correctly to fit the service’s exact needs and to support operational aspects such as availability and security.